Hi! Welcome back! In this new post, I will show you how to connect your Scala application to a LeanXcale database using the well-known JDBC library. I will also show you how to connect with LeanXcale using the direct Java API from Scala.

Scala is a relatively new programming language created by Martin Odersky that combines object-oriented and functional programming in one concise, high-level language. It is commonly used in Big Data applications because it is one of the languages supported by Spark, which is one of the most used frameworks for Big Data computing.

Thanks to Scala’s Java interoperability, Scala is compatible with Java. The standard Scala backend is a JVM. This means that Scala classes are Java classes, even though there are some limitations in a few cases. Due to this, however, we can use not only the LeanXcale JDBC driver but also the Java API.

PREREQUISITES

To execute the steps laid out in this post, you will need to meet several prerequisites. As a working environment, I am using IntelliJ IDEA for Scala development, since it facilitates some aspects of sbt dependency management.

I am also using an on-premise LeanXcale installation. If you want to use LeanXcale to follow this post, you can request a free trial through the following link.

For the client connection libraries, you will need the LeanXcale JDBC driver and the Java KiVi Direct API library. You can download it from our webpage.

DEVELOPMENT

Since the purpose of this post is to show you how to interact with LeanXcale from Scala, I will import some data and then perform some analytical queries using both the JDBC driver and the direct Java API. The dataset I am going to use is the well-known Boston Housing dataset.

DATASET AND TABLE CREATION

This dataset was collected in 1978 and consists of aggregated data concerning houses in different suburbs of Boston, with a total of 506 cases and 14 columns. According to the Boston Housing Dataset website, we can define each of the 14 variables as follows:

- CRIM – per capita crime rate by town

- ZN – proportion of residential land zoned for lots over 25,000 sq. ft.

- INDUS – proportion of non-retail business acres per town

- CHAS – Charles River dummy variable (1 if tract bounds river; 0 otherwise)

- NOX – nitric oxides concentration (parts per 10 million)

- RM – average number of rooms per dwelling

- AGE – proportion of owner-occupied units built prior to 1940

- DIS – weighted distances to five Boston employment centers

- RAD – index of accessibility to radial highways

- TAX – full-value property-tax rate per $10,000

- PTRATIO – pupil-teacher ratio by town

- B – 1000(Bk – 0.63)^2 where Bk is the proportion of blacks by town

- LSTAT – % lower status of the population

- MEDV – median value of owner-occupied homes in $1000’s

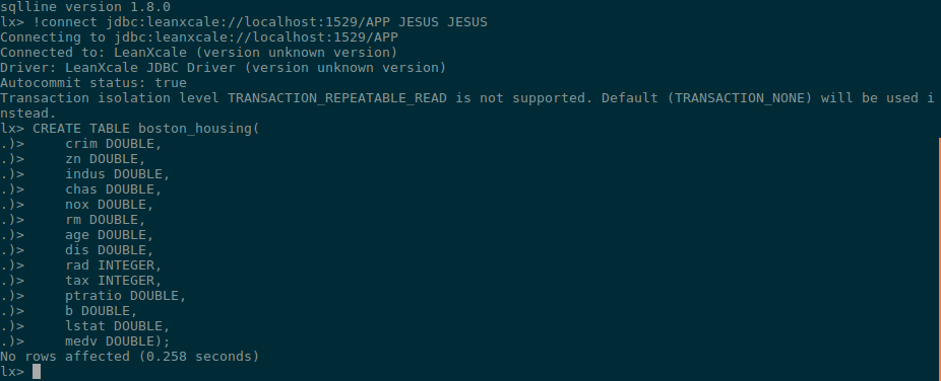

To reflect this data in our database, we must recreate the schema formed in this case in one single table, which I will call boston_housing. In the project code, you can find the SQL create script. To create the table, I will use LeanXcale’s SQL client provided with the client package.

DEPENDENCY MANAGEMENT

To add dependencies to our Scala project, we have two alternatives. In the first one, which I am following, sbt manages the dependencies following the specifications from the build.sbt file. To load the dependencies needed from LeanXcale, I add them to the libraryDependencies configuration and specify the Maven repository where these dependencies are located so sbt knows where to find them.

name := "scalaJDBC" version := lxVersion scalaVersion := "2.12.6" val lxVersion = "1.6-RC.5" //resolvers += "Local Maven Repository" at "file://"+Path.userHome.absolutePath+"/.m2/repository" resolvers += "Leanxcale Nexus Repository" at "https://nexus.leanxcale.com/repository/maven-releases" // Change this to another test framework if you prefer libraryDependencies += "org.scalatest" %% "scalatest" % "3.0.5" % "test" libraryDependencies += "com.leanxcale" % "kivi-api" % lxVersion classifier "jar-with-dependencies" libraryDependencies += "com.leanxcale" % "qe-driver" % lxVersion classifier "jar-with-dependencies"

The second alternative is to use unmanaged dependencies, which might be simpler if you are new to all this. For this method, you need to create a lib folder and put the dependencies needed for this project inside it. In this case, you would need to add the LeanXcale JDBC driver and the direct Java API.

No matter which option you choose, if you build the application with sbt, it will package the required dependencies for you.

DATABASE CONNECTION

Once we have imported the dependency, we need to connect with LeanXcale using JDBC. To do this, we just need to define a few properties such as the connection string and the credentials so we can access our database and create the connection using the DriverManager.

//JDBC Initialization val driver = "com.leanxcale.client.Driver" val url = "jdbc:leanxcale://localhost:1522/APP" val username = "JESUS" val password = "JESUS" var connection:Connection = null Class.forName(driver) connection = DriverManager.getConnection(url, username, password)

To connect to the database using the direct Java API, we must define some settings and create a new session using the connection string as provided in the code. We can interact with the database using this session object.

//Kivi Api initilization

val settings: Settings = Settings.parse("lx://localhost:9876/APP@JESUS")

var session: Session = null

var sessionImpl: SessionImpl = null

session = SessionFactory.newSession(settings)

LOADING PHASE

Massive loading is one of the principal advantages of the direct Java API because you can get high insertion rates without paying the overhead that SQL requires. You can see how LeanXcale performs using the direct KiVi interface in this benchmark against DynamoDB. Thus, LeanXcale offers the flexibility to load large amounts of data using the direct Java API and then use advanced analytics SQL queries, taking advantage of the maturity of SQL language.

In this example, I will show you how to perform insertions in LeanXcale using both JDBC and direct Java API, although it is important to know the pros and cons of each accessing method.

To insert data using JDBC, we will use a preparedStatement and execute the statement for each row of the csv. There are several things to consider when you are performing inserts using JDBC that, for the sake of simplicity, we will not worry about for our scenario. However, they can make a big difference in a real-life scenario.

The first thing to consider is autocommit. By default, the autocommit property is set to true in the LeanXcale JDBC driver. However, this might be very inefficient if you are executing massive loads. It is much more convenient to manage the commit operation and make only one commit per ten or twenty thousand inserts rather than pay the cost of one commit operation per insert.

The second thing to consider is JDBC batching. Instead of executing each operation, we can batch in the JDBC client and, after some insert operations, we can execute the batch stored in the statement.

def loadTableFromCsvJDBC(connection: Connection): Unit ={

val statement = connection.prepareStatement("INSERT INTO JESUS.boston_housing values

(?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?)")

val bufferedSource = io.Source.fromFile("data/BostonHousing.csv")

var columns = new ListBuffer[String]()

for (line <- bufferedSource.getLines.drop(1)){

val words = line.split(",").map(_.trim)

statement.setDouble(1,words(0).toDouble)

statement.setDouble(2,words(1).toDouble)

statement.setDouble(3,words(2).toDouble)

statement.setDouble(4,(words(3).substring(1, words(3).length()-1)).toDouble)

statement.setDouble(5,words(4).toDouble)

statement.setDouble(6,words(5).toDouble)

statement.setDouble(7,words(6).toDouble)

statement.setDouble(8,words(7).toDouble)

statement.setDouble(9,words(8).toInt)

statement.setDouble(10,words(9).toInt)

statement.setDouble(11,words(10).toDouble)

statement.setDouble(12,words(11).toDouble)

statement.setDouble(13,words(12).toDouble)

statement.setDouble(14,words(13).toDouble)

statement.executeUpdate()

}

}

However, if we want to use the direct Java API, the first thing we need to start is a transaction from the session object already created. Within this transaction, we can interact with the database to afterward commit or rollback the changes. In the same way as before, we iterate through every line in the csv file and fill tuples with the data. By using tuples, we can insert data through this API. Once we have created each tuple from the table in question, filled each field with data from the schema, and inserted it into the table, we can commit the transaction.

When using the Java API, we are responsible for managing the transactions, so we have to commit the transaction ourselves, taking care of the previously mentioned issue with the autocommit.

def loadTableFromCsvKIVIAPI(session: Session, columns:Array[String]): Unit ={

var database = session.database()

session.beginTransaction()

var table = database.getTable("boston_housing")

val bufferedSource = io.Source.fromFile("data/BostonHousing.csv")

for (line <- bufferedSource.getLines.drop(1)) {

val words = line.split(",").map(_.trim)

var tpl:Tuple = table.createTuple()

tpl.putDouble(columns(0), words(0).toDouble)

tpl.putDouble(columns(1), words(1).toDouble)

tpl.putDouble(columns(2), words(2).toDouble)

tpl.putDouble(columns(3), (words(3).substring(1, words(3).length()-1)).toDouble)

tpl.putDouble(columns(4), words(4).toDouble)

tpl.putDouble(columns(5), words(5).toDouble)

tpl.putDouble(columns(6), words(6).toDouble)

tpl.putDouble(columns(7), words(7).toDouble)

tpl.putInteger(columns(8), words(8).toInt)

tpl.putInteger(columns(9), words(9).toInt)

tpl.putDouble(columns(10), words(10).toDouble)

tpl.putDouble(columns(11), words(11).toDouble)

tpl.putDouble(columns(12), words(12).toDouble)

tpl.putDouble(columns(13), words(13).toDouble)

table.insert(tpl)

}

session.commit()

}

ANALYTICAL QUERIES

Once the data is loaded into the table, I will perform some basic analytical queries to demonstrate the functioning and show how easily we can construct more complex queries in both JDBC and the Java API.

To remain within the scope of this post, I will just execute a full scan and some basic aggregation such as the max, min, and mean over the target column—the house pricing “MEDV.”

In JDBC, it is enough to simply execute the SQL query using a statement and retrieve the desired data from the resultset.

//Data Exploration

//Min, max, mean on MEDV

val statementStats = connection.createStatement()

val statsRS = statementStats.executeQuery("SELECT MIN(MEDV) AS MIN_PRICE, MAX(MEDV) AS " +

"MAX_PRICE, AVG(MEDV) AS MEAN_PRICE FROM JESUS.boston_housing")

while(statsRS.next()){

val max = statsRS.getDouble("MAX_PRICE")

val min = statsRS.getDouble("MIN_PRICE")

val mean = statsRS.getDouble("MEAN_PRICE")

println(s"Using JDBC: MEDV stats: MAX $max, MIN $min, MEAN $mean")

}

statementStats.close()

However, to calculate those aggregates using the direct Java API, we will need to create the tuple iterator objects, which are responsible for performing the scans with the appropriate requirements, such as filters or aggregations.

val aggList:java.util.List[String] = new util.ArrayList[String]()

val tplItermax:TupleIterable = table.find().aggregate(aggList, max("max",new

KiviFieldExpression("MEDV")))

val iterMax = tplItermax.iterator()

val tplItermin:TupleIterable = table.find().aggregate(aggList, min("min",new

KiviFieldExpression("MEDV")))

val iterMin = tplItermin.iterator()

val tplItermean:TupleIterable = table.find().aggregate(aggList, avg("avg",new

KiviFieldExpression("MEDV")))

val iterMean = tplItermean.iterator()

For the aggregations in this example, we can use a list of the names of the columns we want to group by (none, in our case) and then specify the aggregation function to use alongside an alias for the returned column and the field to compute the aggregation function.

To finish the scan, we can iterate the result and get the value from the tuple returned. Finally, we need to close the tuple iterables and the session with the database.

while(iterMax.hasNext){

val tpl = iterMax.next()

maxValue = tpl.getDouble("max")

}

while(iterMin.hasNext){

val tpl = iterMin.next()

minValue = tpl.getDouble("min")

}

while(iterMean.hasNext){

val tpl = iterMean.next()

meanValue = tpl.getDouble("avg")

}

tplIterable.close()

tplItermax.close()

tplItermin.close()

tplItermean.close()

session.close()

CONCLUSION

Now that you have read this post and hopefully completed all the steps, you know how to integrate a Scala application with a LeanXcale database, using two different access methods: LeanXcale JDBC driver and direct Java API. You have also learned how to load the required dependencies in our project using sbt and insert some data using both methods, and you understand the benefits of using the direct API for massive insertions. Last but not least, you have performed some example queries using JDBC and Java API.

Happy coding!

WRITTEN BY

Jesus Manuel Gallego Romero

Software Engineer at LeanXcale

jesus.gallego@leanxcale.com

https://www.linkedin.com/in/jes%C3%BAs-manuel-gallego-romero-68a430134/